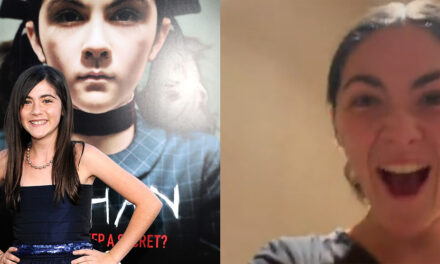

Who’s calling? | Photo by Chris Welch / The Verge

One of the stranger applications of deepfakes (AI technology used to manipulate audiovisual content) is the audio deepfake scam. Hackers use machine learning to clone someone’s voice, then combine that voice clone with social engineering techniques to convince people to move money where it shouldn’t be. Such scams have succeeded in the past, but how good are the voice clones used in these attacks? We’ve never actually examined the audio from a deepfake scam, until now.

Security consulting firm NISOS has released a report analyzing one such attempted scam and shared the audio with Motherboard. The excerpt below is part of a voicemail sent to an employee at an unnamed tech firm, in which a voice that sounds like the…

Read more: theverge.com
