OpenAI’s latest strange yet fascinating creation is DALL-E, which by way of hasty summary might be called “GPT-3 for images.” It creates illustrations, photos, renders or whatever method you prefer, of anything you can intelligibly describe, from “a cat wearing a bow tie” to “a daikon radish in a tutu walking a dog.” But don’t write stock photography and illustration’s obituaries just yet.

As usual, OpenAI’s description of its invention is quite readable and not overly technical. But it bears a bit of contextualizing.

What researchers created with GPT-3 was an AI that, given a prompt, would attempt to generate a plausible version of what it describes. So if you say “a story about a child who finds a witch in the woods,” it will try to write one, and if you hit the button again, it will write it again, differently. And again, and again, and again.

Some of these attempts will be better than others; indeed, some will be barely coherent while others may be nearly indistinguishable from something written by a human. But it doesn’t output garbage or serious grammatical errors, which makes it suitable for a variety of tasks, as startups and researchers are exploring right now.

DALL-E (a combination of Dali and WALL-E) takes this concept one step further. Turning text into images has been done for years by AI agents, with varying but steadily increasing success. In this case the agent uses the language understanding and context provided by GPT-3 and its underlying structure to create a plausible image that matches a prompt.

As OpenAI puts it:

GPT-3 showed that language can be used to instruct a large neural network to perform a variety of text generation tasks. Image GPT showed that the same type of neural network can also be used to generate images with high fidelity. We extend these findings to show that manipulating visual concepts through language is now within reach.

What they mean is that an image generator of this type can be manipulated naturally, simply by telling it what to do. Sure, you could dig into its guts and find the token that represents color, and decode its pathways so you can activate and change them, the way you might stimulate the neurons of a real brain. But you wouldn’t do that when asking your staff illustrator to make something blue rather than green. You just say, “a blue car” instead of “a green car” and they get it.

So it is with DALL-E, which understands these prompts and rarely fails in any serious way, although it must be said that even when looking at the best of a hundred or a thousand attempts, many images it generates are more than a little… off. Of which later.

In the OpenAI post, the researchers give copious interactive examples of how the system can be told to do minor variations of the same idea, and the result is plausible and often quite good. The truth is these systems can be very fragile, as they admit DALL-E is in some ways, and saying “a green leather purse shaped like a pentagon” may produce what’s expected but “a blue suede purse shaped like a pentagon” might produce nightmare fuel. Why? It’s hard to say, given the black-box nature of these systems.

Image Credits: OpenAI

But DALL-E is remarkably robust to such changes, and reliably produces pretty much whatever you ask for. A torus of guacamole, a sphere of zebra; a large blue block sitting on a small red block; a front view of a happy capybara, an isometric view of a sad capybara; and so on and so forth. You can play with all the examples at the post.

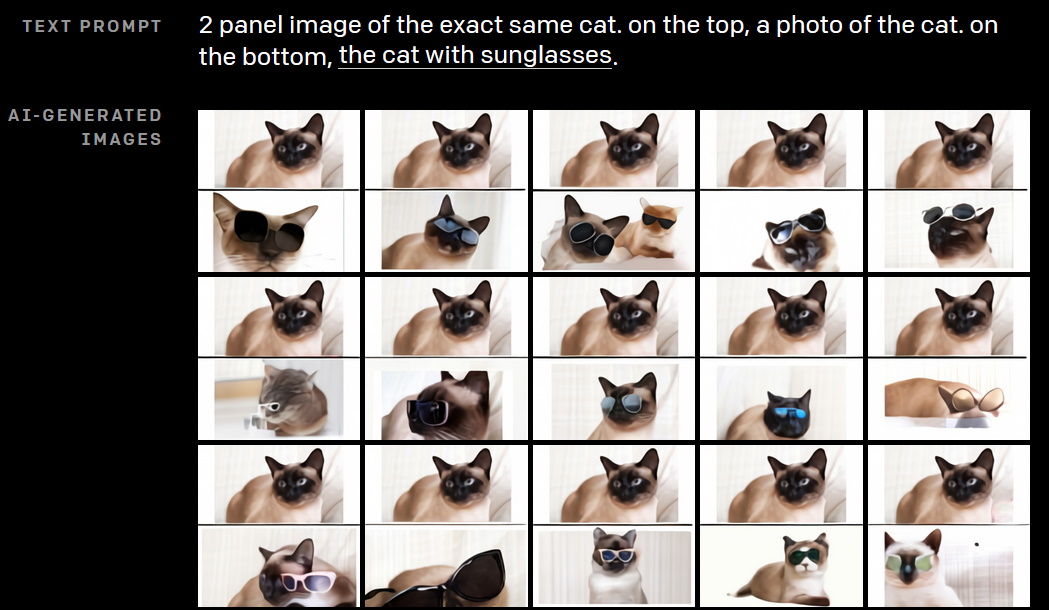

It also exhibited some unintended but useful behaviors, using intuitive logic to understand requests like asking it to make multiple sketches of the same (non-existent) cat, with the original on top and the sketch on the bottom. No special coding here: “We did not anticipate that this capability would emerge, and made no modifications to the neural network or training procedure to encourage it.” This is fine.


Interestingly, another new system from OpenAI, CLIP, was used in conjunction with DALL-E to understand and rank the images in question, though it’s a little more technical and harder to understand. You can read about CLIP here.

The implications of this capability are many and various, so much so that I won’t attempt to go into them here. Even OpenAI punts:

In the future, we plan to analyze how models like DALL·E relate to societal issues like economic impact on certain work processes and professions, the potential for bias in the model outputs, and the longer term ethical challenges implied by this technology.

Right now, like GPT-3, this technology is amazing and yet difficult to make clear predictions about.

Notably, very little of what it produces seems truly “final”; that is to say, I couldn’t tell it to make a lead image for anything I’ve written lately and expect it to put out something I could use without modification. Even a brief inspection reveals all kinds of AI weirdness (Janelle Shane’s specialty), and while these rough edges will certainly be buffed off in time, it’s far from safe, the way GPT-3 text can’t just be sent out unedited in place of human writing.

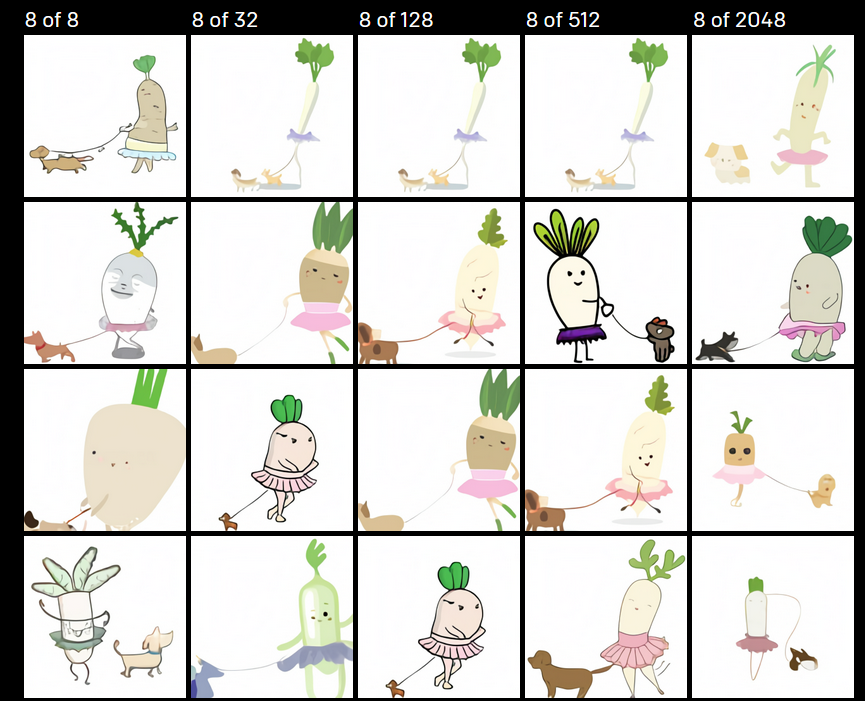

It helps to generate many and pick the top few, as the following collection shows:

The top eight out of a total of X generated, with X increasing to the right. Image Credits: OpenAI
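For the curious, the generate-many-and-keep-the-best approach can be sketched in a few lines of Python. This is only an illustration of the general idea, not OpenAI’s code: the helper names `top_k` and `toy_score` are made up for this example, the candidates are text descriptions standing in for generated images, and the scorer is a crude word-overlap measure standing in for a real ranking model such as CLIP.

```python
import heapq
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def top_k(candidates: Sequence[T], score: Callable[[T], float], k: int = 8) -> List[T]:
    """Return the k highest-scoring candidates, best first."""
    return heapq.nlargest(k, candidates, key=score)


def toy_score(prompt: str, description: str) -> float:
    """Hypothetical stand-in scorer: fraction of prompt words found in the description.

    A real system would score an image against the prompt with a learned model;
    this placeholder only exists so the ranking step can be run end to end.
    """
    prompt_words = set(prompt.lower().split())
    description_words = set(description.lower().split())
    if not prompt_words:
        return 0.0
    return len(prompt_words & description_words) / len(prompt_words)


prompt = "a happy capybara"

# Assumption: each string describes one generated candidate image.
candidates = [
    "a sad capybara",
    "a happy capybara",
    "a blue car",
    "happy dog",
]

# Keep only the two candidates that best match the prompt.
best = top_k(candidates, lambda c: toy_score(prompt, c), k=2)
print(best)
```

The more candidates you generate, the better the survivors tend to be, which is the effect the collection above illustrates as X grows.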

That’s not to detract from OpenAI’s accomplishment here. This is fabulously interesting and powerful work, and like the company’s other projects it will no doubt develop into something even more fabulous and interesting before long.
